In December 2023, a paper titled "Recommender Systems with Generative Retrieval" [1] was presented at NeurIPS 2023. The paper introduced a novel recommender system, referred to as 'TIGER,' which garnered significant attention due to its innovative approach and state-of-the-art results in sequential recommendation tasks.

Overview of TIGER

The TIGER architecture incorporates several cutting-edge techniques and has a number of interesting properties:

- Generative, autoregressive recommender system.

- Transformer-based encoder-decoder architecture.

- Item representations utilizing "Semantic IDs," which are tuples of discrete codes.

- A residual quantization mechanism (RQ-VAE) for item representation.

- Achieved state-of-the-art results on benchmark datasets, surpassing previous models significantly.

Details of TIGER’s Architecture

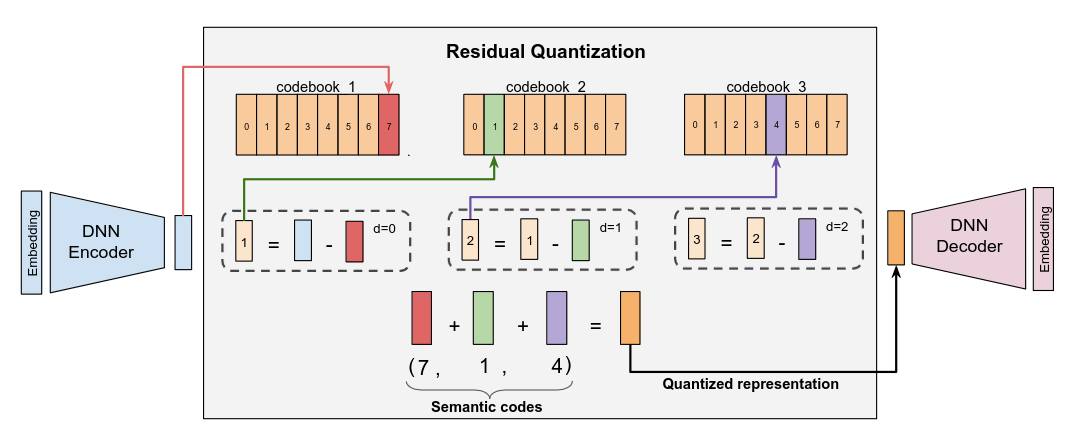

The TIGER model leverages RQ-VAE to create sparse item representations, a method well-suited for transformer decoders where suffix codes depend on prefix codes. The model architecture includes a Bidirectional Transformer Encoder to aggregate historical interactions and an Autoregressive Transformer Decoder to predict the next item in a sequence.

Figure 1: RQ-VAE: In the figure, the vector output by the DNN Encoder, say $r_0$ (represented by the blue bar), is fed to the quantizer, which works iteratively. First, the closest vector to $r_0$ is found in the first-level codebook. Let this closest vector be $e_{c_0}$ (represented by the red bar). Then, the residual error is computed as $r_1 := r_0 - e_{c_0}$. This is fed into the second level of the quantizer, and the process is repeated: the closest vector to $r_1$ is found in the second level, say $e_{c_1}$ (represented by the green bar), and then the second-level residual error is computed as $r_2 := r_1 - e_{c_1}$. Then, the process is repeated for a third time on $r_2$. (source)
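The iterative quantization step described in the caption can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the function name `residual_quantize` and the hand-written toy codebooks are assumptions made for the example, and a real RQ-VAE learns its codebooks jointly with the encoder and decoder.

```python
import numpy as np


def residual_quantize(r0, codebooks):
    """Quantize r0 level by level and return (codes, approximation).

    Each codebook is an array of shape (num_codes, dim). At every level the
    nearest codebook vector to the current residual is selected, and that
    vector is subtracted to form the residual for the next level.
    """
    residual = np.asarray(r0, dtype=float)
    approximation = np.zeros_like(residual)
    codes = []
    for codebook in codebooks:
        distances = np.linalg.norm(codebook - residual, axis=1)
        index = int(np.argmin(distances))
        codes.append(index)
        approximation = approximation + codebook[index]
        residual = residual - codebook[index]
    return tuple(codes), approximation


# Toy codebooks with illustrative values only (not learned).
codebooks = [
    np.array([[1.0, 0.0], [0.0, 1.0]]),
    np.array([[0.5, 0.0], [0.0, 0.5]]),
    np.array([[0.25, 0.0], [0.0, 0.25]]),
]
codes, approximation = residual_quantize([0.3, 1.6], codebooks)
print(codes)          # (1, 1, 0)
print(approximation)  # [0.25 1.5 ]
```

The resulting tuple of indices plays the role of a Semantic ID: each successive code refines the approximation left by the previous levels, which is why later codes depend on earlier ones.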

The full architecture looks as follows:

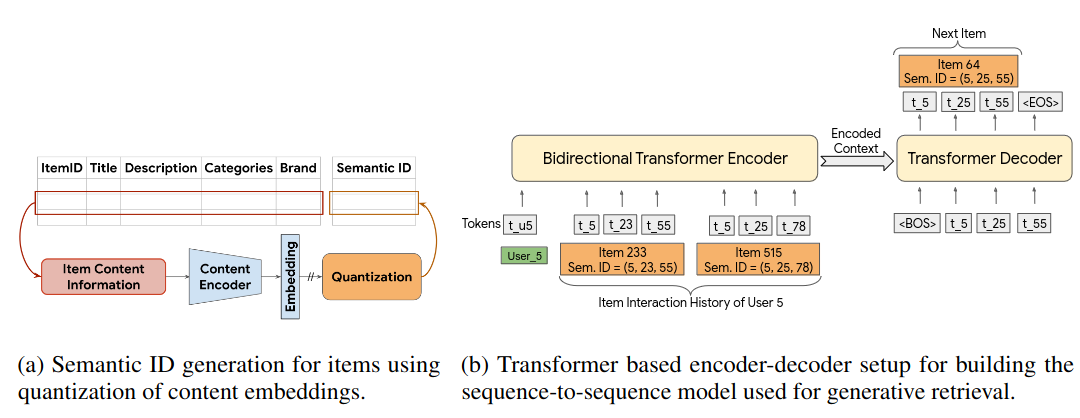

Figure 2: An overview of the modeling approach in TIGER. (source)

On the left-hand side of the diagram, RQ-VAE item code generation can be seen. The right-hand side is the core neural architecture for autoregressive modeling. It consists of a Bidirectional Transformer Encoder, which aggregates historical interactions, and an autoregressive Transformer Decoder, which proposes the next item in the sequence as a series of residual codes.

TIGER’s Benchmark Results

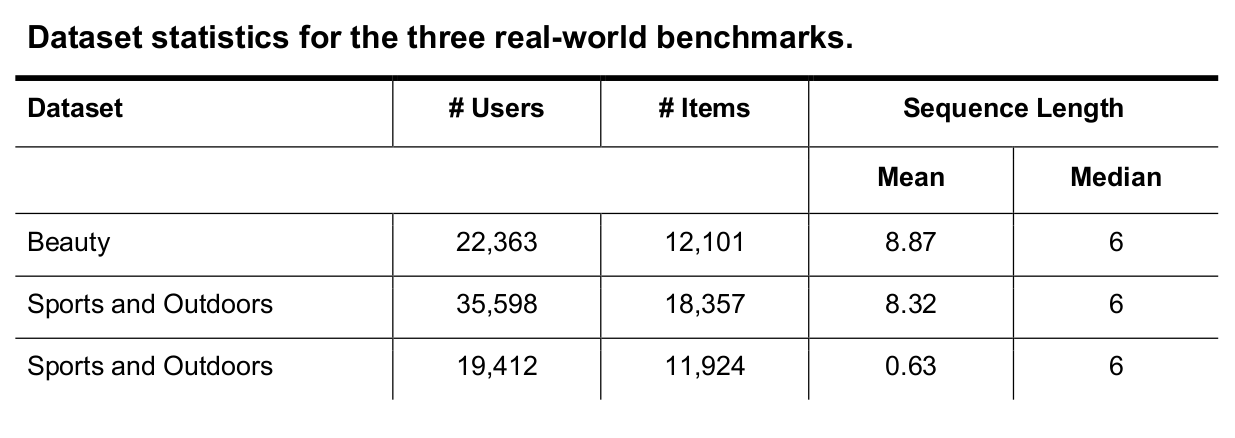

TIGER was benchmarked on several Amazon datasets:

We use three public benchmarks from the Amazon Product Reviews dataset, containing user reviews and item metadata from May 1996 to July 2014. We use three categories of the Amazon Product Reviews dataset for the sequential recommendation task: “Beauty”, “Sports and Outdoors”, and “Toys and Games”. This table summarizes the statistics of the datasets. We use users’ review history to create item sequences sorted by timestamp and filter out users with fewer than 5 reviews. Following the standard evaluation protocol, we use the leave-one-out strategy for evaluation. For each item sequence, the last item is used for testing, the item before the last is used for validation, and the rest is used for training. During training, we limit the number of items in a user’s history to 20. (source)
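The protocol quoted above can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `build_splits`, the `(user_id, item_id, timestamp)` input format, and the choice to keep the most recent 20 training items are all hypothetical, since the quoted text does not say how the history limit is applied.

```python
from collections import defaultdict


def build_splits(reviews, min_reviews=5, max_history=20):
    """Build leave-one-out splits from (user_id, item_id, timestamp) tuples."""
    events_by_user = defaultdict(list)
    for user_id, item_id, timestamp in reviews:
        events_by_user[user_id].append((timestamp, item_id))

    splits = {}
    for user_id, events in events_by_user.items():
        if len(events) < min_reviews:
            continue  # filter out users with fewer than min_reviews reviews
        # Sort each user's interactions by timestamp.
        items = [item for _, item in sorted(events, key=lambda e: e[0])]
        splits[user_id] = {
            # Assumption: truncate to the most recent max_history items.
            "train": items[:-2][-max_history:],
            "valid": items[-2],  # the item before the last
            "test": items[-1],   # the last item
        }
    return splits


# Toy data: user "u1" has six reviews (given out of order), "u2" only three.
reviews = [("u1", f"i{t}", t) for t in reversed(range(6))]
reviews += [("u2", "x", 0), ("u2", "y", 1), ("u2", "z", 2)]
splits = build_splits(reviews)
print(splits)
```

In this toy run only `u1` survives the filter, with `i0` to `i3` used for training, `i4` for validation, and `i5` for testing.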

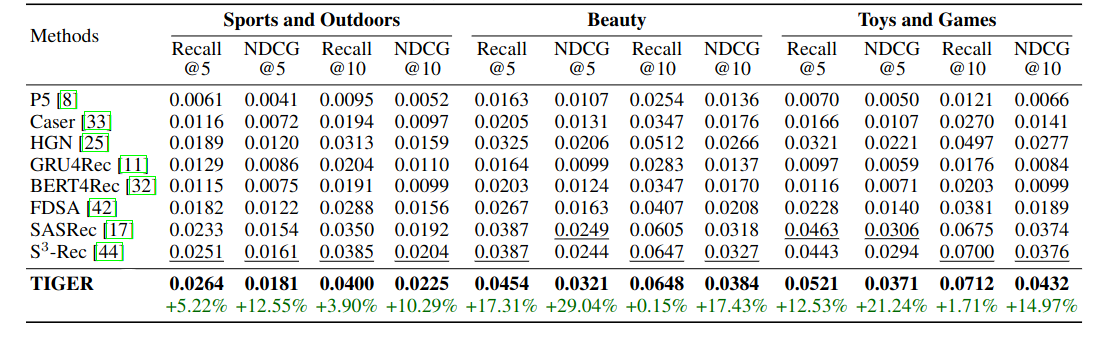

TIGER outperformed prior strong baselines on the Amazon datasets used. Results below:

Performance comparison on sequential recommendation. The last row depicts % improvement with TIGER relative to the best baseline. Bold (underline) are used to denote the best (second-best) metric. (source)

TIGER achieved improvements of +0.15% to +29.04% over the prior state of the art on all metrics on all datasets.

BaseModel vs TIGER Architecture

BaseModel shares some conceptual similarities with TIGER, such as autoregressive modeling and sparse integer tuple representation of items. However, there are significant differences in their implementation:

- Item Representation: BaseModel uses EMDE 2.0 for item representation instead of RQ-VAE, with independent item codes rather than hierarchical ones.

- Model Architecture: BaseModel employs a proprietary neural network designed to maximize data efficiency and learning speed, differing from TIGER’s transformer architecture.

- Inference Method: BaseModel performs direct predictions, avoiding the computationally costly beam-search decoding used in TIGER.
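To give a sense of what beam-search decoding over code tuples involves, here is a generic sketch. It is not TIGER's or BaseModel's code: `beam_search`, `toy_log_probs`, and the probability values are hypothetical stand-ins, with `toy_log_probs` playing the role of a decoder call that scores the next code given the codes chosen so far.

```python
import math


def beam_search(next_code_log_probs, num_levels, beam_size):
    """Return the beam_size highest-scoring code tuples of length num_levels.

    next_code_log_probs(prefix) stands in for one decoder call and returns a
    list of log-probabilities, one per candidate next code.
    """
    beams = [((), 0.0)]
    for _ in range(num_levels):
        candidates = []
        for prefix, score in beams:
            for code, log_p in enumerate(next_code_log_probs(prefix)):
                candidates.append((prefix + (code,), score + log_p))
        # Keep only the best beam_size partial tuples for the next level.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_size]
    return beams


def toy_log_probs(prefix):
    # Hypothetical scorer: the next-code distribution depends on the last code.
    if not prefix or prefix[-1] == 0:
        probs = [0.6, 0.3, 0.1]
    else:
        probs = [0.1, 0.2, 0.7]
    return [math.log(p) for p in probs]


top = beam_search(toy_log_probs, num_levels=3, beam_size=2)
for code_tuple, score in top:
    print(code_tuple, round(math.exp(score), 3))
```

In this sketch each level after the first requires one scoring call per surviving prefix, so the work grows with both the beam size and the tuple length, whereas a direct prediction scores candidates in a single pass.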

BaseModel vs TIGER Performance

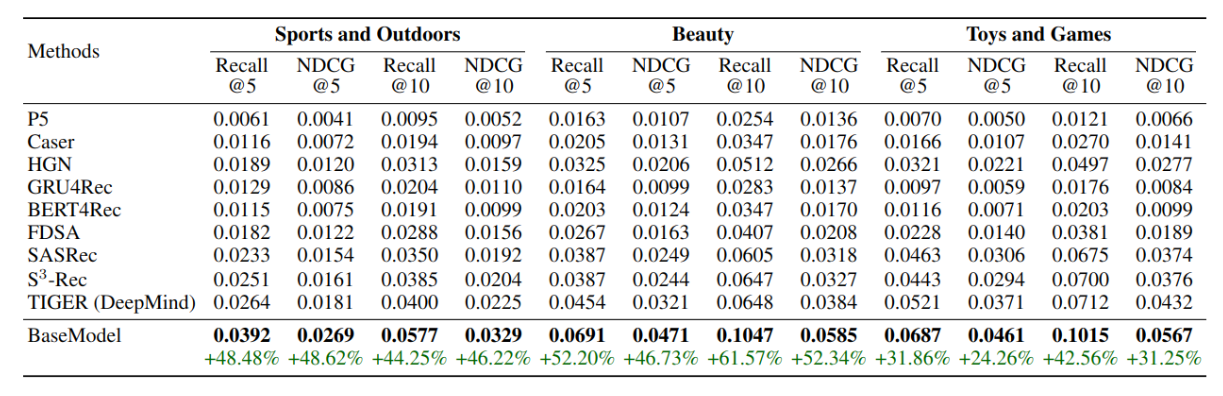

To measure BaseModel against TIGER, we replicated the exact data preparation, training, validation, and testing protocols described in the TIGER paper. The exact same implementations of the Recall and NDCG metrics were used for consistency.

To compare the models, a few steps were performed:

- Implementation of the exact data preparation protocol as per the TIGER paper.

- Implementation of the exact training, validation, and testing protocol as per the TIGER paper.

- Sourcing the exact implementations of the Recall and NDCG metrics used in TIGER and incorporating them into BaseModel.

- Model training and evaluation (on all 3 datasets).
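For reference, Recall@K and NDCG@K under leave-one-out evaluation reduce to simple expressions, because each user has exactly one held-out item. The sketch below uses the common textbook definitions and hypothetical function names; it is not the metric code sourced from TIGER.

```python
import math


def recall_at_k(ranked_items, target, k):
    """1.0 if the held-out target appears in the top-k ranked items, else 0.0."""
    return 1.0 if target in ranked_items[:k] else 0.0


def ndcg_at_k(ranked_items, target, k):
    """NDCG@k with a single relevant item, so the ideal DCG equals 1."""
    top_k = ranked_items[:k]
    if target not in top_k:
        return 0.0
    rank = top_k.index(target) + 1  # 1-indexed position of the target
    return 1.0 / math.log2(rank + 1)


ranked = ["a", "b", "c", "d", "e"]
print(recall_at_k(ranked, "c", 5))  # 1.0
print(ndcg_at_k(ranked, "c", 5))    # 0.5
print(recall_at_k(ranked, "e", 3))  # 0.0
```

Per-user values are then typically averaged over all test users to obtain the reported metric.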

The full process took 3 hours from start to finish. The parameters of BaseModel were not tuned in any way, and the results look as follows:

Despite limited optimization of BaseModel’s parameters, the results are rather compelling. BaseModel achieved a further +24.26% to +61.57% improvement over TIGER’s results on the same datasets.

Given TIGER’s reliance on RQ-VAE, costly Transformer training, and beam-search inference, it is reasonable to infer that BaseModel’s training and inference processes are orders of magnitude faster.

Conclusion

The comparison between BaseModel and TIGER reveals significant differences in their architectural choices and performance. While TIGER represents a notable advancement in generative retrieval recommender systems, BaseModel’s approach demonstrates superior efficiency and effectiveness in sequential recommendation tasks. Further optimization and exploration of BaseModel could lead to even more significant advancements in the field of recommender systems.

The potential of our methods encourages continued innovation and comparison with leading models in the field to push the boundaries of what behavioral models can achieve.

References

[1] Rajput, Shashank, et al. "Recommender systems with generative retrieval." Advances in Neural Information Processing Systems 36 (2024).